Last 2 weeks in multimodal AI art (05/Jul - 19/Jul)

The first big text-to-video model is out (CogVideo), more text-to-3D releases, image editing with text keeps getting better, and a bunch of community-trained diffusion models

Two weeks' worth of news piled up during the last stretch of my vacation! But it was worth the wait: a lot of amazing news!

Text-to-video updates

Text-to-3D (and 3D-to-text) updates

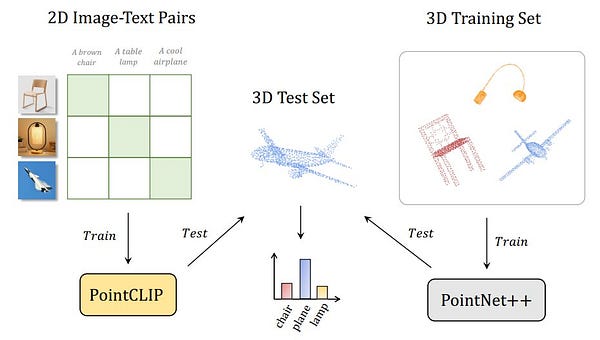

CLIP-Actor released - generate moving 3D avatars*, Pulsar+CLIP released - generate 3D point clouds*, PointCLIP released - classify 3D point clouds*

Text-to-image updates

CF-CLIP released - edit images with words*, CLIP2StyleGAN released - transform images with words*, 6 community-trained diffusion models*

CLIP and CLIP-like models

Education

* code released

Text-to-video updates:

- CogVideo released (GitHub, Guided Demo, Run it yourself, paying $1.10/h for the compute)

by THUDM

The most powerful open-source text-to-video model is now publicly available. However, running it is still a challenge, as it only runs on commercial-grade GPU machines.

Text-to-3D (and 3D-to-text) updates:

- CLIP-Actor released - generate moving 3D avatars (GitHub)

by AMI Lab @ POSTECH

- Pulsar+CLIP released - generate 3D point clouds (Colab)

by nev (@apeoffire)

- PointCLIP released - classify 3D point clouds (GitHub)

by Renrui Zhang

Text-to-image updates:

- CF-CLIP released - edit images with words (GitHub)

by Yingchen Yu

- CLIP2StyleGAN released - transform images with words (GitHub)

by @AbdalRameen

- 6 community-trained diffusion models (Watercolor, Lithography, Medieval, Handpainted CG, Ukiyo-e Portraits, Liminal Spaces)

Watercolor, Lithography and Medieval by @KaliYuga_ai, Handpainted CG by @FeiArt_AiArt, Ukiyo-e Portraits by @avantcontra, Liminal Spaces by @JohnWowCool

CLIP and CLIP-like models:

- Chinese-CLIP released (GitHub)

by Junshu Pan

A CLIP model for the Chinese language: modest performance for now, but with huge potential.